CATCH AND RELEASE

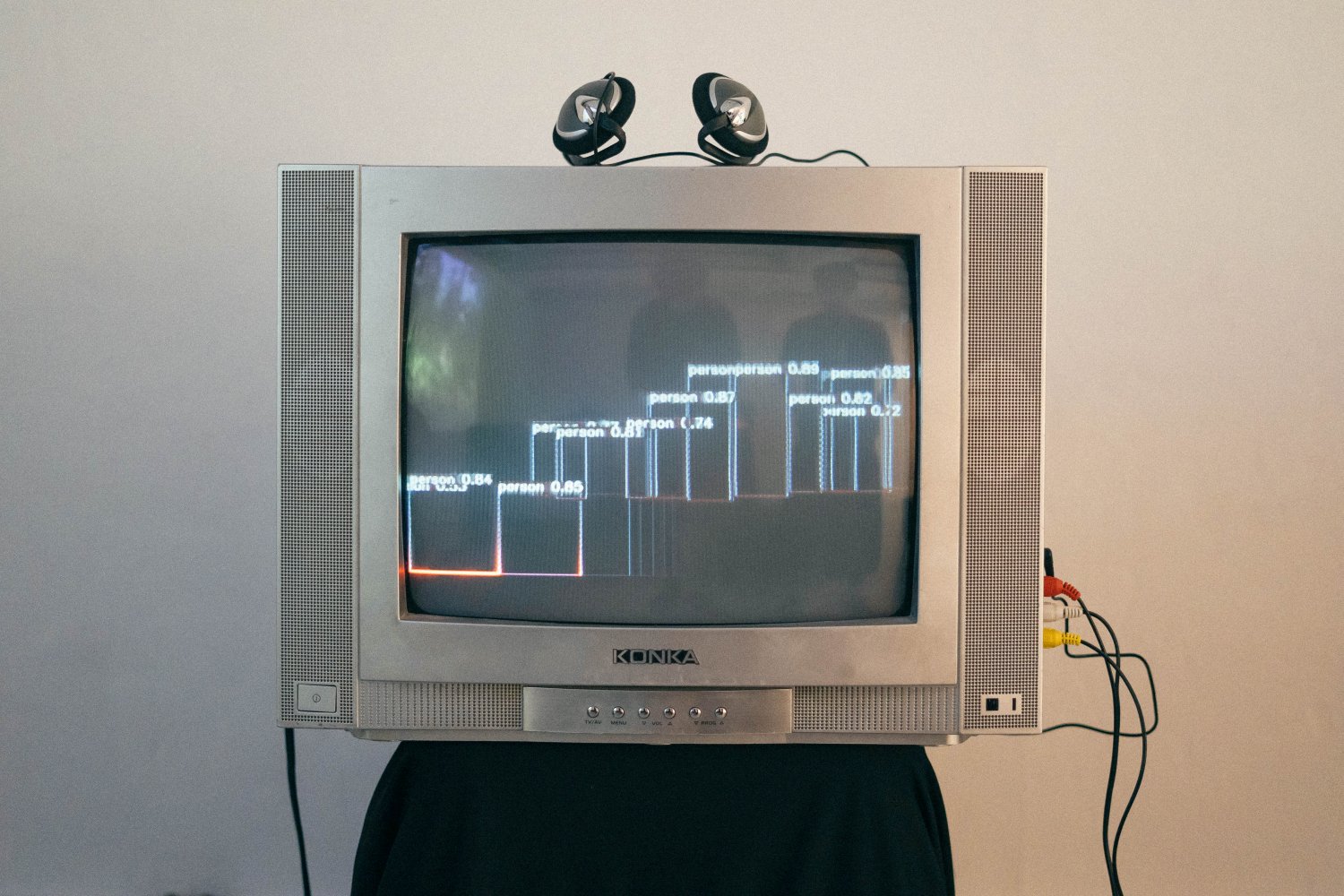

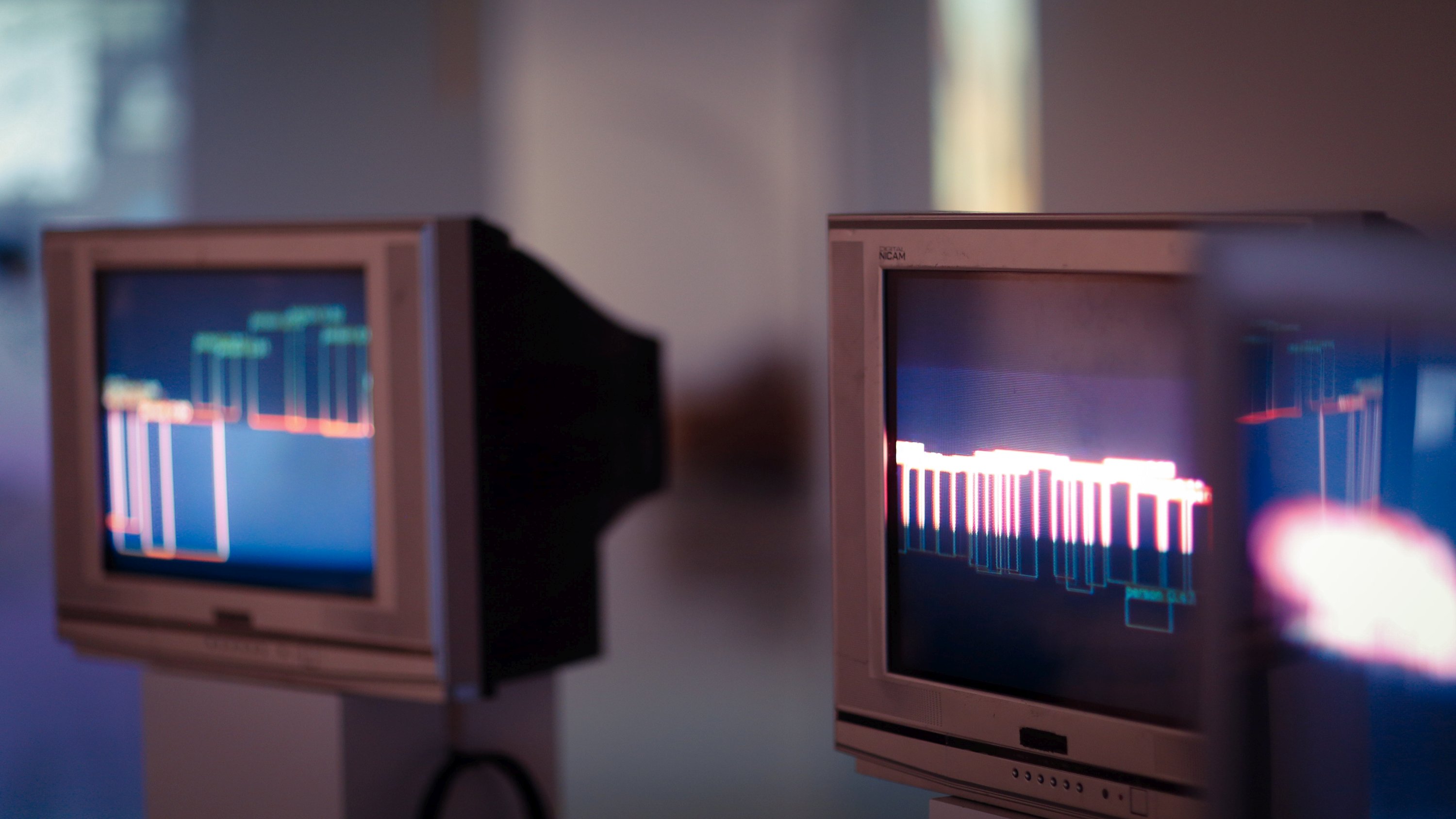

Video, dimension variable; 2023; 4x CRT TVs.

"The limits of my language means the limits of my world."

- Tractatus Logico-Philosophicus

by Ludwig Wittgenstein

The way we imagine machines see us is imbued with our own biased perceptions and expectations, and nothing like the way machines actually see us. In this work, I use the medium of dance to show how machines can digest the dynamic forms of human bodies in different genres of music-driven movement. As we marvel at the patterns detected by computer vision, free of the human way of looking, we begin to understand how to see the world the way machines do. It isn’t until the end of the dance when we can reunite with our comfortable human visions, but by then the dance is finished, and we wondered what the dancing experience really was like, if we didn’t have to limit ourselves to the lens of the machine. Machine vision can only catch the fleeting forms of the human, and release them when they are no longer detected. They can see the dynamic patterns, but they can’t see the reality behind the abstractions. Perhaps mere abstractions are enough?

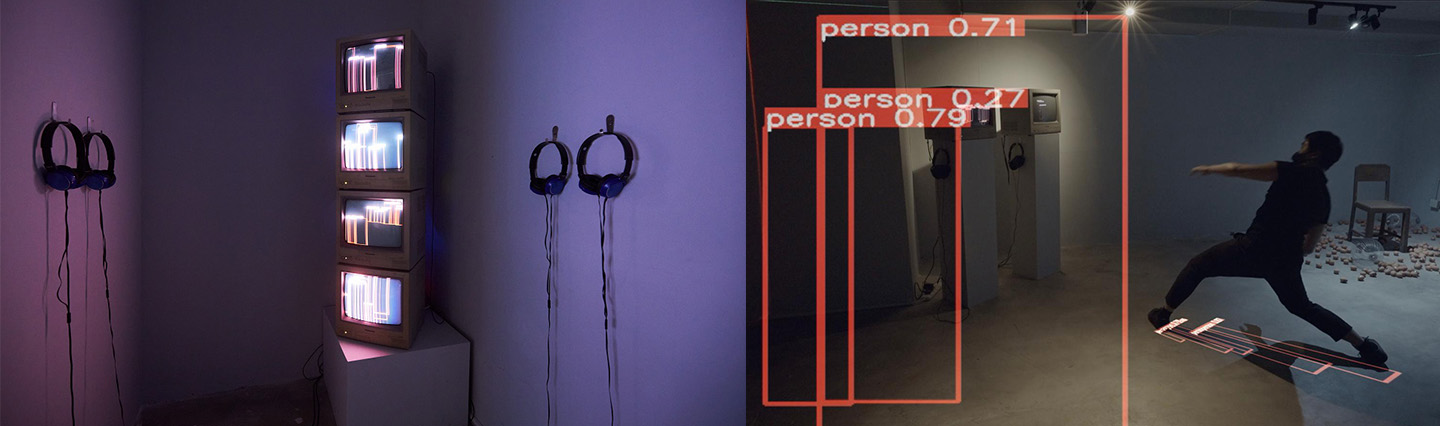

Catch and Release uses the Yolo algorithm to computationally detect human movements in four dance performances shown on four CRT TV’s: Cuban Rueda, Swing, Bollywood, and Salsa. Catch and Release was exhibited at I Was of Three Minds at JCCAC, and as part of I’m Always Here at Osage Gallery Hong Kong. Catch and Release was shown at Eaton Hong Kong’s Tomorrow Maybe Gallery as part of The Jumping Frames Festival: Expanded Space Exhibit.

The way machines see us vs the way we see are selves are flip sides of the same coin. Take a movement-based experience like dance (choreographed by the artist and performed in New York and Tokyo venues), machines see merely patterns and movement, often detecting people where there is none, or seeing inconsequential objects that don’t contribute to our conscious experience. For example, it can see a photographer in the stands who stood up a couple of times, or an usher who arrives just at the end, or two dancers “fused” into one by the algorithm, or a distant face walking up the parking lot in the outdoor venue, etc, whereas humans have the attention to filter them out from their consciousness because they don’t contribute to the dance itself. These quirks abound in the piece itself, where each of the four performances take place in different spaces, with different musical rhythms and contexts.

Just by looking at the machine interpretation of the video itself, we can see patterns of activity that, if well immersed in the medium, gives us a sense of what the machine really perceives: probabilities of an object being a person, and the instantaneous prediction of her presence. The four videos conver a spectrum, including jazz swing, Cuban Rueda, a Bollywood-west coast swing medly, and salsa. When we hear the music, we begin to put the machine side and the human side together, but then our human side begins to yearn to see the real thing, the real performance behind the cloud. At the end, we see only the celebratory ending, a posthoc horay to the human attention process, in trying to see the story that doesn’t exist in the machine, and in the story that isn’t shown to the human. Often we catch the machine’s perspective for a brief moment, and then release it to become human again.

The four videos are each timed exactly 2:10. They each begin only with the computer vision outputs (rectangular drawings of predicted human locations) with probabilities, and each begin revealing the original video at 1:56, before going dark for the final 10 seconds to begin the loop again. They should be shown synchronized on four CRT style TVs. The TV colors can be tuned to show the rectangles at different colors with vintage perspective. The vintage feel of the TVs and video colors make it appear an old analog system, and contrasts with the technical proficiency of the computer vision prediction. The dance forms themselves are analog experiences, and to miss them is to miss the moment forever. Each TV is attached to a head phone, allowing individuals to experience each piece separately. The TVs should also be put in proximity so that there’s a social component of seeing what others are seeing, or potentially seeing others dance to the music. Media players can be setup to loop the videos, and a common power source allows the CRT TVs and videos to be on at the same time with a single switch. The geometric arrangement of the four TVs is up to the gallery. A total of up to 7 videos (including from other performances) are available if more artifacts are desired.

For the Jumping Frames exhibit at Eaton’s Tomorrow Maybe Gallery, Kenneth Hui Ka Chun worked with RAY LC to create a performance whereby Kenneth reacts to the moving forms in physical space as the virtual movements populate both the CRTs and the projected reality in the exhibition space. For this exhibition-performance, each of the dance videos are spread apart in different parts of the gallery, including four stacked on top of each other on the back side, two next to each other in the main gallery space, and one projected onto the performance space where Kenneth danced, allowing audiences to see the movements everywhere as part of a continuous whole, evoking the aspirations of the field of Dance Fusion. During the performance, Kenneth reacted to the different dance forms around him using rhythms of one musical form followed by another, sometimes bringing polyrhythms into his repertoire, all without music, bringing to life the human vision of the machine vision interpretation, forming a loop of human-machine-human perceptions that illustrate our increasingly intricate, layered, and feedback-based interaction with computer vision.