LIGHT UP

video projection, 6x2m; 2022.

"If you want to know how much darkness there is around you,

you must sharpen your eyes, peering at the faint lights in the distance."

Invisible Cities by Italo Calvino

The city we imagine together is our collective we. When we wake, the city comes alive, and when we work, the city is illuminated by signs of activity. How do we see ourselves in this collective striving amid a city in flux? Light Up uses over 4000 photos of Hong Kong taken in the daytime and nighttime to generate a collective moment of the city going into darkness as lights come on, an unreal version of the city seen through the lens of machine learning. As we look closely from the collective perspective afforded by machine learning, we realize that the ebb and flow of a city is the ups and downs of us, as we move, strive, and become enlightened. Programming by Zhiyuan Zhang.

Light Up is a machine-learning-generated interpolated experience using StyleGAN2 (visuals) and GANSynth (music). Light Up was exhibited at the immersive exhibition Light Up at Goethe Institute, Hong Kong Arts Centre, curated by Zoe Chan. It was shown at Mind(e)scape at Soho House Hong Kong, curated by Linda Cheung. Light Up was projection mapped onto a 3D cityscape of Hong Kong in M3: Beyond Territories, an exhibition for the HK Institute of Architects shown in Hangzhou, Beijing, New York, and Hong Kong. An extended version of Light Up was shown at Art.Growth, 16th Hangzhou Culture and Creative Expo, as City Lights.

Light Up has been shown in multiple forms reflecting its diverse perspectives. For Soho House, it was a set of CRT TVs synchronized to show a double vision of the city’s transition from daylight to nightlight, without sound. For Hong Kong Arts Centre, music by Bach fed into GANSynth allowed us to create three unique musical pieces from the same MIDI tunes. The timbres generated by machine learning were designed to form three types of voices: sustained, plucked, and symphonic. When played together, they form a symphony in which each voice changes timbre over time. For the Goethe Institute installation, three versions of the video were produced, each beginning from the same frame and interpolating through a different path: one purely in the daytime, one purely in the nighttime, and one that transitions from daytime to nighttime. These three videos are matched to the three voices of the ML-generated music, so that the symphony produced by the three versions has both visual and auditory harmony. The visual symphony comes from different ways of interpolating the city; the musical symphony comes from the timbre of the mixed voices. Indeed, it is a lighting up of multiple collective perspectives as seen by machine learning.
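As a rough illustration of how three videos can share a first frame yet diverge, the sketch below builds three latent-space paths from one starting vector. It is not the code used for Light Up: the 512-dimensional latent size, the spherical interpolation, the frame count, and the randomly drawn "day" and "night" waypoints are all assumptions made for the example, and the step that would feed each latent into a trained StyleGAN2 generator is left out.

```python
import numpy as np

LATENT_DIM = 512  # assumption: a commonly used StyleGAN2 latent size


def slerp(a, b, t):
    """Spherically interpolate between latent vectors a and b at fraction t."""
    a_unit = a / np.linalg.norm(a)
    b_unit = b / np.linalg.norm(b)
    omega = np.arccos(np.clip(np.dot(a_unit, b_unit), -1.0, 1.0))
    if omega < 1e-6:
        # Nearly parallel vectors: fall back to linear interpolation.
        return (1.0 - t) * a + t * b
    so = np.sin(omega)
    return (np.sin((1.0 - t) * omega) / so) * a + (np.sin(t * omega) / so) * b


def latent_path(start, waypoints, frames_per_segment):
    """Return one latent per frame, starting at `start` and visiting each waypoint."""
    anchors = [start] + list(waypoints)
    frames = []
    for a, b in zip(anchors[:-1], anchors[1:]):
        for i in range(frames_per_segment):
            frames.append(slerp(a, b, i / frames_per_segment))
    frames.append(anchors[-1])  # land exactly on the final waypoint
    return np.stack(frames)


rng = np.random.default_rng(0)
shared_start = rng.standard_normal(LATENT_DIM)

# Hypothetical waypoints: in practice these would be latents whose generated
# images look like daytime or nighttime Hong Kong; here they are random.
day_points = rng.standard_normal((3, LATENT_DIM))
night_points = rng.standard_normal((3, LATENT_DIM))

paths = {
    "day": latent_path(shared_start, day_points, 30),
    "night": latent_path(shared_start, night_points, 30),
    "day_to_night": latent_path(
        shared_start, [day_points[0], night_points[0], night_points[1]], 30
    ),
}
```

Each array in `paths` holds one latent vector per video frame. Because all three begin at `shared_start`, a generator would render the same opening image for each video before the paths separate.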

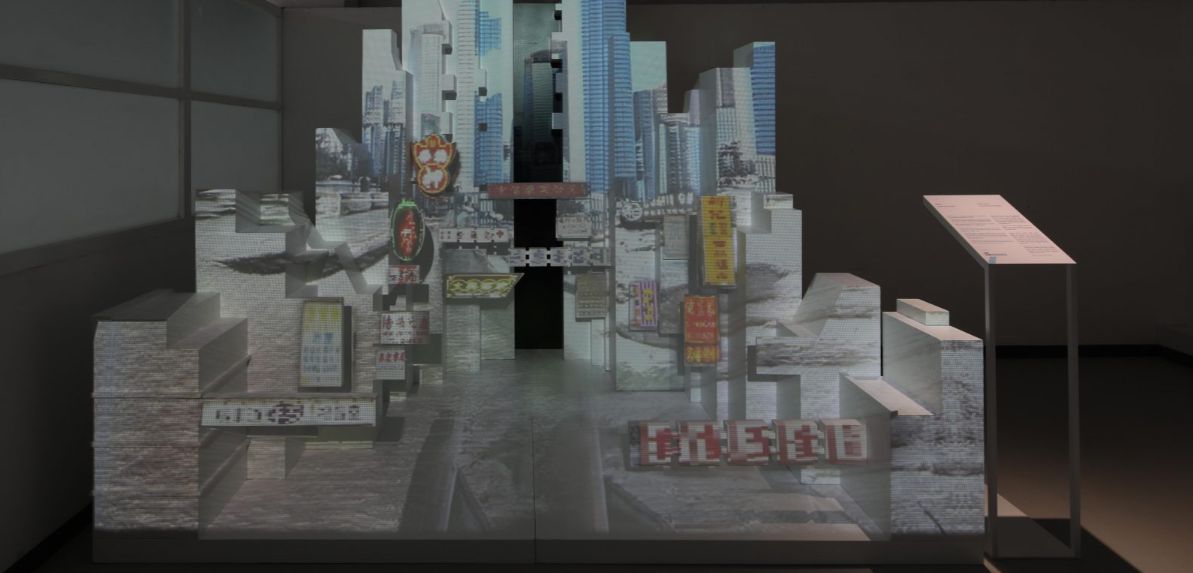

For M3, shown internationally in Beijing, Hangzhou, New York, and Hong Kong, Light Up was shown immersively, projection mapped onto a 3D landscape constructed by Paul Shepherd and LCM Leung. The 3D construction took various forms, including a large-scale structure, an AR experience, and a smaller-scale detailed model with landmark signs of Hong Kong. These alternative visions of Light Up capture the evolving nature of the lighting-up process as the Hong Kong architectural environment comes to life in each of these other exhibition locations.

For the Hangzhou Culture and Creative Expo, the work was embedded at the center of an exhibition themed around the growth of the arts within cities. The three panels are intentionally unbalanced, with the center work mounted at a different height, showing the floating connector between the left (daytime) and the right (nighttime) in synchrony. The fusion of the two sides of a city is the real growth.