SOUND OF(F)

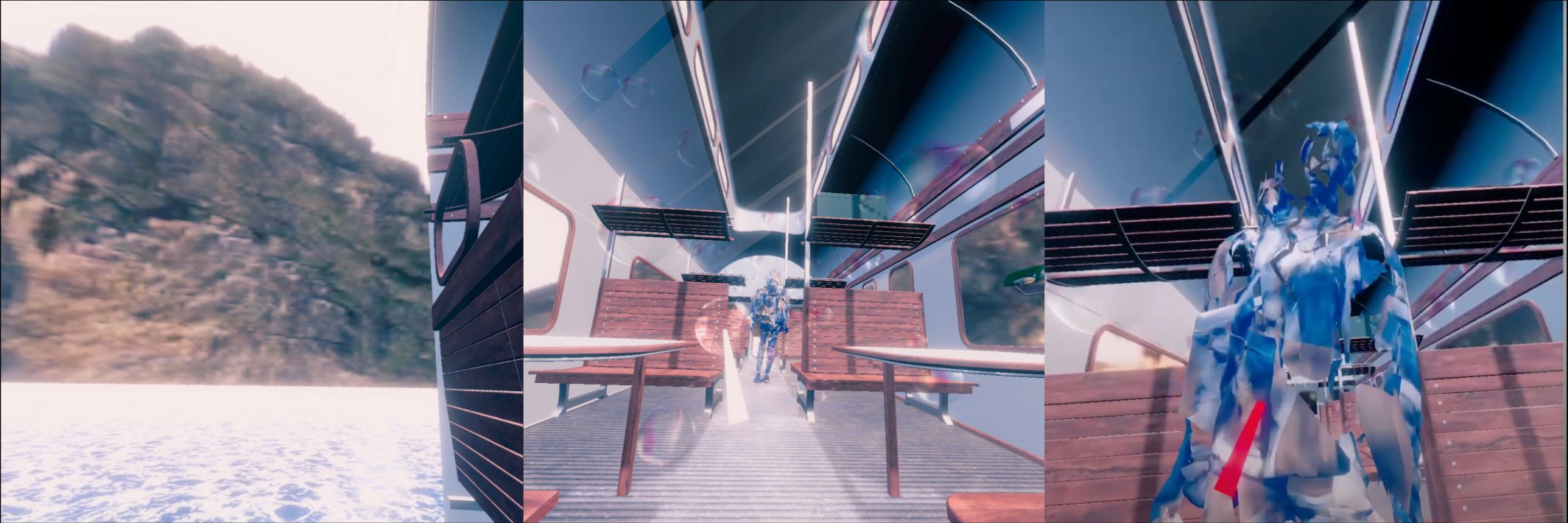

VR interactive work, dimension variable; 2021.

"The most dangerous silence is noise."

- Armin Wiebe

What you hear cannot be undone, just as what you’ve done cannot be altered. Our lives are inundated with noise, from sounds we don’t want to hear waking us up, to fake news we don’t want to pay attention to, from rumor and gossip beyond our control or ability to explicate, to misinformation designed to trigger our behaviors. To show the way noise disguised as real information impinges on our consciousness, we created a narrative intervention that follows our consciousness taking stock of our dreams and ideas as the metaphor of a train traveling through a landscape of machine-learning generated landscapes and machine-learning sorted voices. The journey is based around the arrival of the train at a location dreamt up by the machine as a cross between Rueon, France, and Yokohama, Japan, where a person we have known all our lives stands up and exits the train. That character contains the characteristics of multiple people in our lives, just as the landscape transitions between many places we know by an exploration in machine-learned latent spaces. Voices and sounds are interspersed in each location using spheres of deformations of space in VR, and exploring them triggers spatial audio that is grouped using a machine learning algorithm. Together the visual and audio exploration narrates the scenario of the arrival of the train, where our intimate character steps off without saying goodbye, while we summon up our resolve to create an environment to discredit the information source, to finally say goodbye ourselves, to turn off the sound.

See our paper published at EAI ArtsIT Conference on interactivity and game creation, “SOUND OF(F): Contextual storytelling using machine learning representations of sound and music”: Erol, Zhang, Ozgunay & LC (2021). SOUND OF(F) was exhibited at I Was of Three Minds at JCCAC, and as part of I’m Always Here at Osage Gallery Hong Kong.

In dreams, one’s life experiences are jumbled together, so that characters can represent multiple people in your life and sounds can run together without sequential order. To show one’s memories in a dream in a more contextual way, we represent environments and sounds using machine learning approaches that take into account the totality of a complex dataset. The immersive environment uses machine learning to computationally cluster sounds in thematic scenes to allow audiences to grasp the dimensions of the complexity in a dream-like scenario. We applied the t-SNE algorithm to collections of music and voice sequences to explore the way interactions in immersive space can be used to convert temporal sound data into spatial interactions. Applying it to a Virtual Reality (VR) artwork about replaying memories in a dream, we found that audiences can enrich their experience of the story without necessarily gaining an understanding of the artwork through the machine-learning generated soundscapes. This provides a method for experiencing the temporal sound sequences in an environment spatially using nonlinear exploration in VR.

The inspiration for the work comes from a dream where someone close to the dreamer exited the train at her exit without ever saying goodbye. The dreamer then wakes up to find that he has no recollection of the exact person involved but rather a continuous fusing of one person’s face with another person’s personality, the product of a dream that, like machine learning models, fuse together personalities in creating characters and landscape. The character is intended to have within a single person the characteristics of multiple people in our lives, just as the landscape transitions between many places we know.

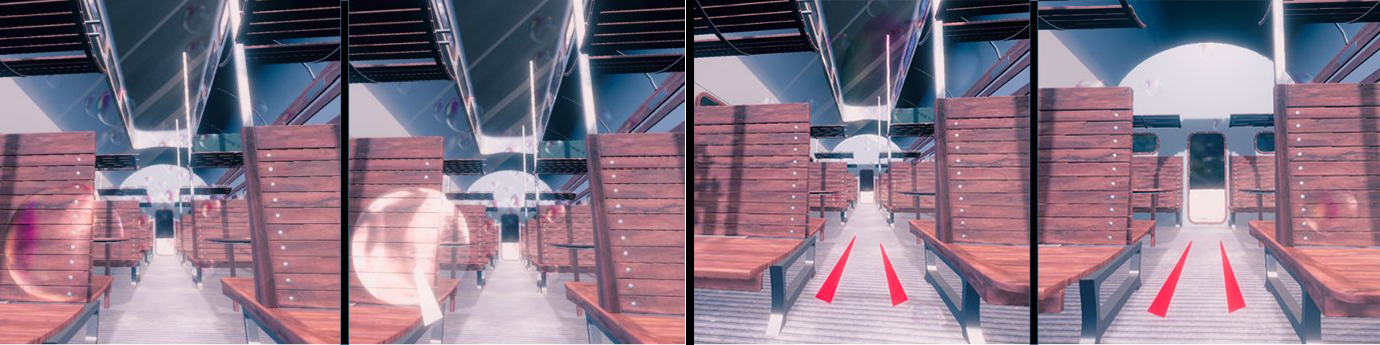

The experience takes around five minutes and loops repeatedly. The audience begins inside a train where she can move around using the joystick in the controller but cannot exit the train entirely. The train is going nowhere and everywhere. Noises in the form of music and sounds selected for each theme and clustered by t-SNE are found in the environment. They may be sounds that we don’t want to hear waking us up, or fake news that we don’t want to pay attention to, or misinformation designed to trigger our behaviors, but each set of sound revolves around a theme. The audience can listen to the spatial audio presented as translucent spheres in the scene grouped by t-SNE so that similar-feature audio clips are close to each other in space. The sounds are played back (while the sphere colors change) when you hover the mouse over individual clips. Audiences can feel like they are inside sound sequences, with the ability to explore them spatially rather than passively listen to them temporally.

The sounds for each thematic scene consist of sets of both music and sound recording fragments. The themes, presented in order, are: Goodbye, Hope, Longing, Misunderstanding, and finally Silence, each with its own associated character animation of a different way for the character to leave the train. For the Goodbye theme, we used Hiroshi Sato’s Say Goodbye and the goodbye final scene from the movie Casablanca where the main characters go their separate ways at when Ilsa boards the plane. Both are nostalgic and have different approaches to say goodbye: one is hopeful and the other one is dramatic and sad. For the Hope theme, we used a sound recording of a song sung by local Hong Kong people and Martin Luther King’s “I have a dream” speech, which is inviting the dreamer to hope for future. For the Longing scene, we used the song Apo Mesa Pethamenos and the dialog from the car scene in the movie Before Sunset, both of which are about longing for someone, focusing after a breakup. For the Misunderstanding theme, we used Animals’ song Don’t let me be Misunderstood and the movie The Switch that puts the audience inside a fight. The songwriter shouts out about how everybody doesn’t understand him and in the movie we hear one couple’s dialog consistently fail to understand each other. In this scene, we also change the perspective so that the audience is looking at the character and sound bubbles from above, narrating the misunderstanding idea. For the Silence theme, we leave it to similar sounding meditative sounds. This is the literal “sound off” for all noises as well as a goodbye to our character, who now stands outside the train for the first time while the train has stopped moving and the landscape outside has stopped moving. After our intimate character has repeatedly stepped off the train without saying goodbye, we ourselves finally say goodbye ourselves, in order to turn off the sound.

In terms of the context, the train is running on a moving ocean. The interior decoration of the train is nostalgic and given bloom effects to emphasize the dreamy scene. As the train moves, the view outside of the windows will transit between many places seamlessly. The landscape skybox is a looping 360 video generated by state space traversal through a StyleGAN2 machine learning model trained using 478 total 360 degree photos taken by the authors at local landscape locations. As the audience walk in the train, turn around, look out of the windows, and explore the spatial sounds grouped by t-SNE using the controller, they find the ever-changing character on the train that acts on her own to leave the train. Everytime she leaves, the scene transitions to the next segment. The previous character that stepped off the train without saying goodbye is replaced by the same character at a different position. The audience is also relocated to a different location of the train, while the sound bubbles are updated.

This work is run on Oculus Quest 2 on a loop. The headset should be powered when not in use, through a cable that runs through a hole in the plinth. The controllers are hung on rails drilled into the plinth when not in use. The staff instructs the viewer to put on the headset and simply give them the controllers. The only controls possible are moving around in the space using the joystick and pointing at the sound spheres in the environment. All other buttons have been disabled. To start the first time, the staff runs the program inside Unknown Sources, which has been uploaded to the headset ahead of time. The location of the plinth and power cable is up to the curator, but is preferred to be close to the electrical outlet. The audience may experience the work either standing or sitting. In our experience the motion sickness of this work is minimal due to the lack of jarring movements, so they may enjoy it while standing. Optionally, corded headphones can be connected to the Oculus device if the noise in the environment is too loud. If the headset speakers are turned to maximum, usually headphones are not needed.